Open-Ended Instructable Embodied Agents with Memory-Augmented Large Language Models

Gabriel Sarch Yue Wu Sahil Somani Raghav Kapoor Michael Tarr Katerina Fragkiadaki

Carnegie Mellon University

EMNLP Findings 2023

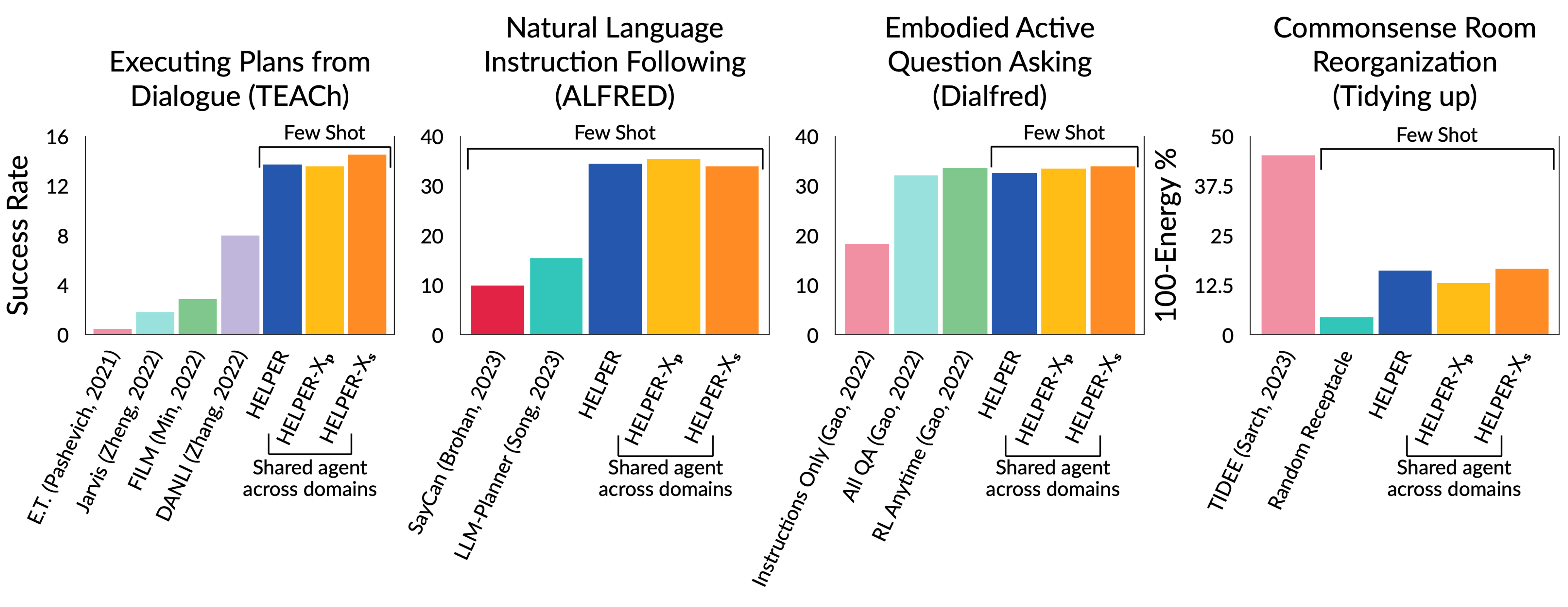

🔥[NEW!] HELPER-X achieves Few-Shot SoTA on 4 embodied AI benchmarks (ALFRED, TEACh, DialFRED, and the Tidy Task) using a single agent, with just simple modifications to the original HELPER.

Abstract

Pre-trained and frozen LLMs can effectively map simple scene re-arrangement instructions to programs over a robot's visuomotor functions through appropriate few-shot example prompting. However, fixed prompts fall short when the agent must parse open-domain natural language and adapt to a user's idiosyncratic procedures that are not known at prompt-engineering time. In this paper, we introduce HELPER, an embodied agent equipped with an external memory of language-program pairs that parses free-form human-robot dialogue into action programs through retrieval-augmented LLM prompting: relevant memories are retrieved based on the current dialogue, instruction, correction, or VLM description, and used as in-context prompt examples for LLM querying. The memory is expanded during deployment to include pairs of the user's language and action plans, to assist future inferences and personalize them to the user's language and routines. HELPER sets a new state-of-the-art in the TEACh benchmark in both Execution from Dialog History (EDH) and Trajectory from Dialogue (TfD), with a 1.7x improvement over the previous SOTA for TfD.

In our recently released HELPER-X, we extend the capabilities of HELPER by expanding its memory with a wider array of examples and prompts, and by integrating additional APIs for asking questions. This simple expansion of HELPER into a shared memory enables the agent to work across the domains of executing plans from dialogue, natural language instruction following, active question asking, and commonsense room reorganization. We evaluate the agent on four diverse interactive visual-language embodied agent benchmarks: ALFRED, TEACh, DialFRED, and the Tidy Task. HELPER-X achieves few-shot, state-of-the-art performance across these benchmarks using a single agent, without requiring in-domain training, and remains competitive with agents that have undergone in-domain training.

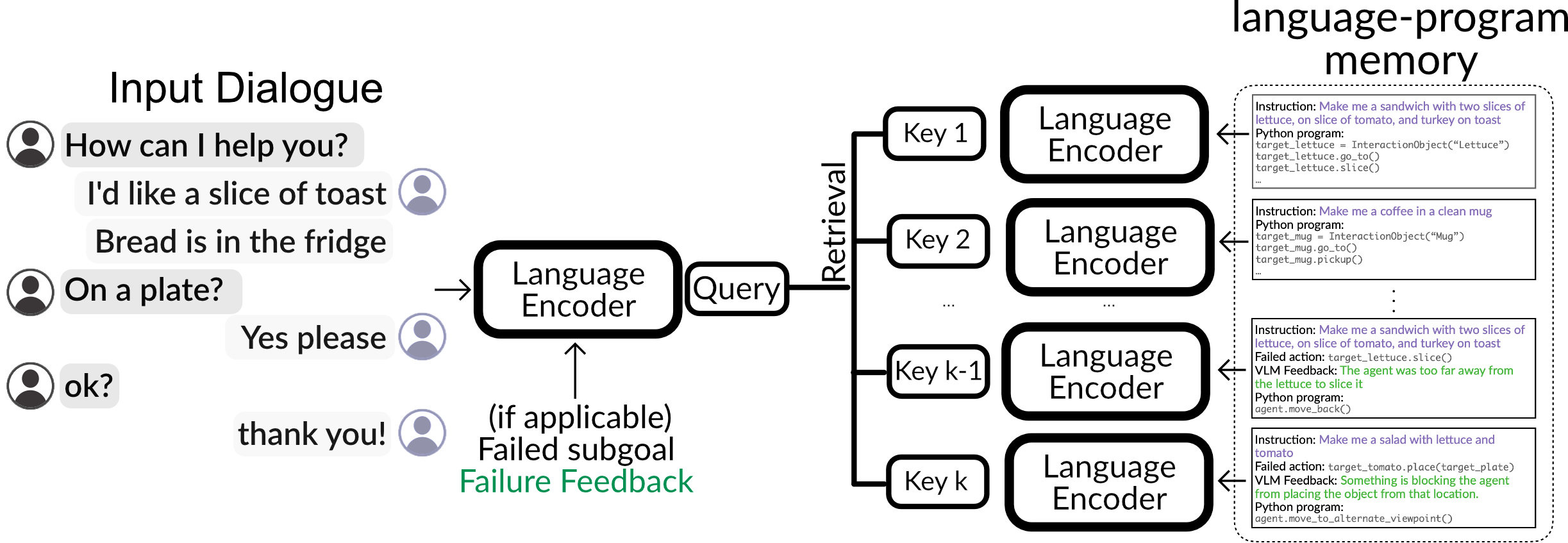

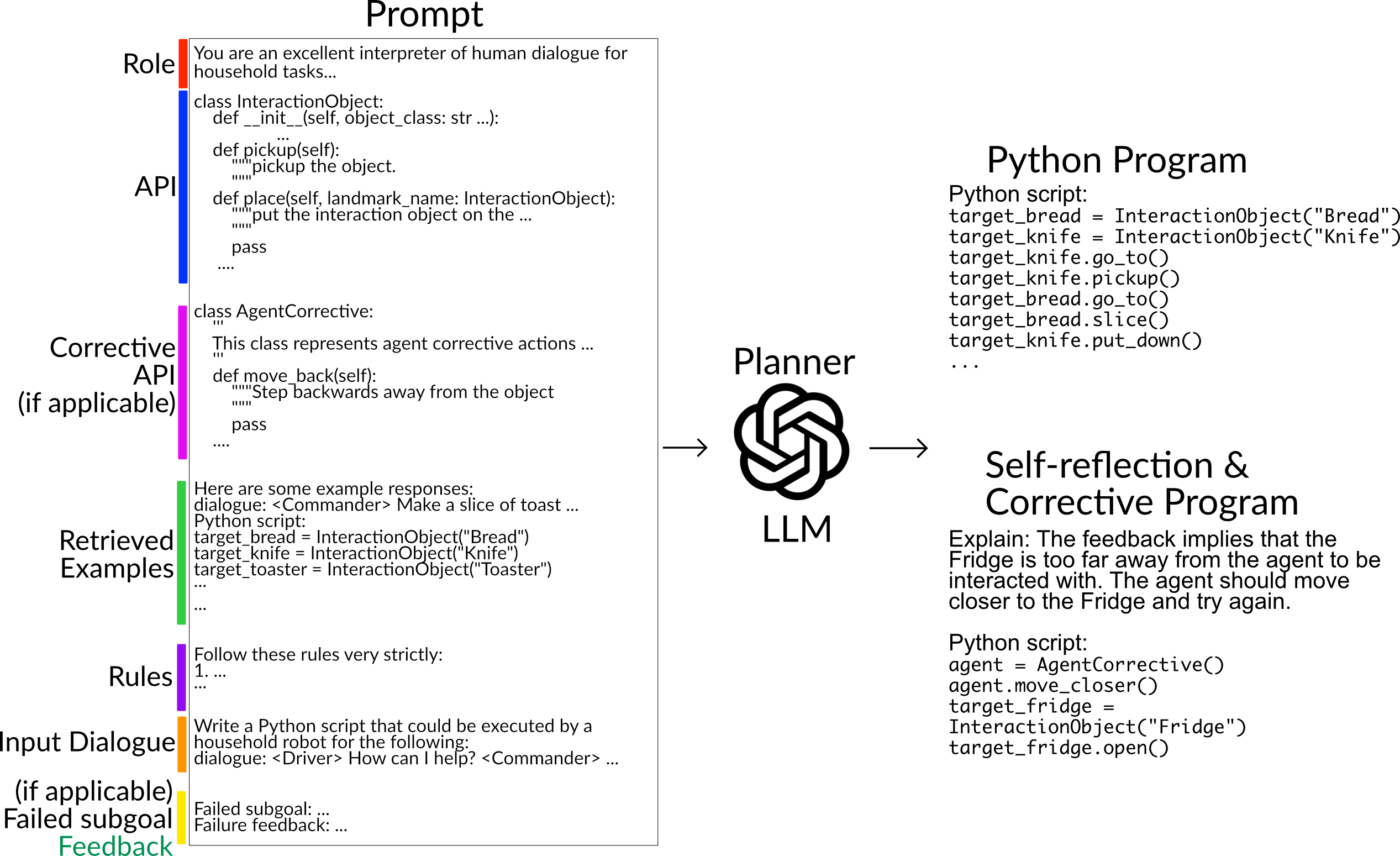

Memory-Augmented Prompting

A key component of HELPER is its memory of language-program pairs, which it uses to generate tailored prompts for pretrained LLMs based on the current language context.

The retrieved examples are added to the LLM prompt, which aids in parsing diverse and user-specific linguistic inputs for planning, re-planning during failures, and interpreting human feedback.
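The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not HELPER's implementation: the bag-of-words `embed` function is an assumption standing in for a learned text encoder, and the memory entries, action names (`find`, `place`, `toggle_on`, and so on), and prompt layout are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words vector (assumption: stands in for a learned text encoder)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(memory, query, k=2):
    """Return the k (language, program) pairs whose language best matches the query."""
    q = embed(query)
    ranked = sorted(memory, key=lambda pair: cosine(q, embed(pair[0])), reverse=True)
    return ranked[:k]


def build_prompt(memory, query, k=2):
    """Place retrieved pairs before the query as in-context examples."""
    parts = [
        f"Input: {language}\nProgram:\n{program}"
        for language, program in retrieve(memory, query, k)
    ]
    parts.append(f"Input: {query}\nProgram:")
    return "\n\n".join(parts)


# Hypothetical memory of language-program pairs.
MEMORY = [
    ("make a cup of coffee",
     "mug = find('Mug')\nplace(mug, 'CoffeeMachine')\ntoggle_on('CoffeeMachine')"),
    ("clean the dirty plate",
     "plate = find('Plate')\nplace(plate, 'Sink')\ntoggle_on('Faucet')"),
    ("slice the tomato",
     "knife = find('Knife')\npickup(knife)\nslice('Tomato')"),
]

prompt = build_prompt(MEMORY, "please rinse the plate in the sink", k=1)
print(prompt)
```

The resulting string would then be sent to the LLM, which completes the final `Program:` slot. The same retrieval could be keyed on a correction or a VLM description instead of an instruction.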

Results

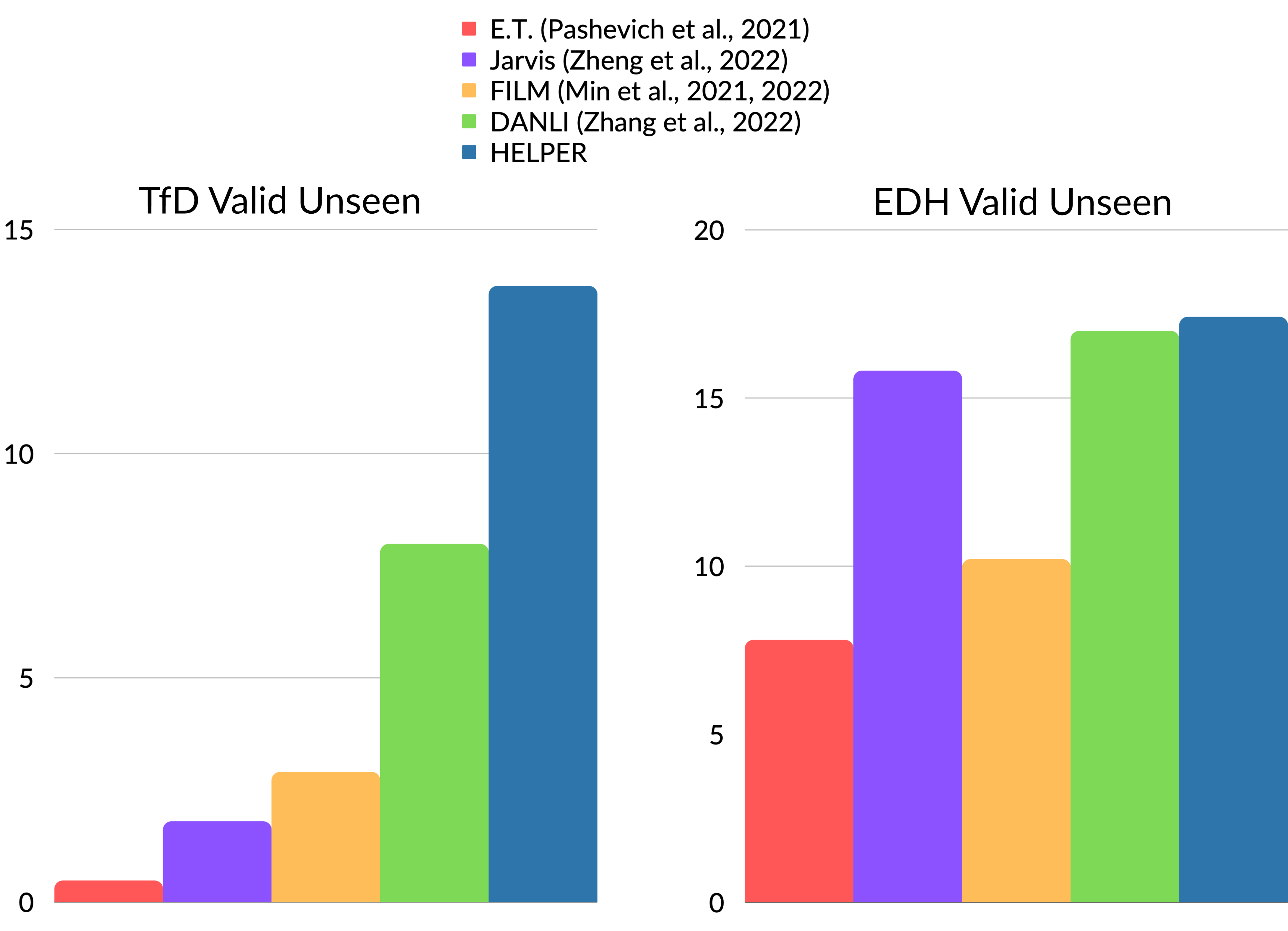

Household Task Execution from Messy Dialogue

We set a new state-of-the-art in the TEACh Trajectory from Dialogue (TfD) and Execution from Dialog History (EDH) benchmarks, where the agent is given a messy dialogue segment and is tasked to infer the sequence of actions from RGB input. HELPER improves TfD task success by 1.7x and goal-condition success by 2.1x over existing works with minimal in-domain finetuning.

Task demo

Error correction demo

User Feedback

Gathering user feedback can improve a home robot’s performance, but frequently requesting feedback on a task can diminish the overall user experience. Thus, we enable HELPER to elicit sparse user feedback only when it has completed execution of the program from the initial user input. Task success improves by an additional 1.3x when HELPER incorporates just two instances of user feedback.
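One way to picture this policy is a loop that asks for feedback only after a program has finished executing, and at most a fixed number of times. The sketch below is an assumption-laden illustration: `plan_fn`, `execute_fn`, and `feedback_fn` are hypothetical callables standing in for the planner, the executor, and the user, and are not part of HELPER's released API.

```python
def run_with_sparse_feedback(instruction, plan_fn, execute_fn, feedback_fn,
                             max_feedback=2):
    """Execute the initial program fully, then request feedback at most
    `max_feedback` times. Returns the number of feedback rounds used."""
    # Feedback is never requested before the initial program completes.
    execute_fn(plan_fn(instruction))

    rounds = 0
    while rounds < max_feedback:
        feedback = feedback_fn()       # hypothetical: None means "user is satisfied"
        if feedback is None:
            break
        execute_fn(plan_fn(feedback))  # re-plan from the feedback text and execute
        rounds += 1
    return rounds


# Stubbed usage: the user gives one piece of feedback, then is satisfied.
executed = []
feedback_queue = ["you missed cleaning the pot", None]
rounds = run_with_sparse_feedback(
    "clean all cookware",
    plan_fn=lambda text: f"program_for({text!r})",
    execute_fn=executed.append,
    feedback_fn=lambda: feedback_queue.pop(0),
)
print(rounds, executed)
```

Capping the number of rounds keeps the interaction cost bounded, which matches the motivation of not asking the user too often.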

Demo: Clean all cookware with user feedback

(skip to 0:41 for user feedback saying HELPER missed cleaning the pot)

Demo: Make breakfast with user feedback

(skip to 2:54 for user feedback saying did not put tomato & lettuce slice on plate)

User Personalization

HELPER expands its memory of programs with successful executions of user-specific procedures; it then recalls and adapts them in future interactions with the user, allowing for user-personalized references.
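The memory expansion described here can be illustrated with a small store that saves a language-program pair only when execution succeeded and later recalls the closest match. This is a sketch under stated assumptions: the `ProgramMemory` class, its word-overlap scoring, and the example programs are hypothetical, and the adaptation of a recalled program by the LLM is not shown.

```python
import re


def tokens(text):
    """Lowercased word set used for a simple overlap score (an assumption)."""
    return set(re.findall(r"[a-z]+", text.lower()))


class ProgramMemory:
    """Hypothetical store of (user language, program) pairs."""

    def __init__(self):
        self.pairs = []

    def add_if_successful(self, language, program, success):
        """Store the pair only when the program executed successfully."""
        if success:
            self.pairs.append((language, program))
        return success

    def recall(self, query):
        """Return the stored pair with the highest Jaccard overlap, or None."""
        q = tokens(query)
        best, best_score = None, 0.0
        for language, program in self.pairs:
            t = tokens(language)
            score = len(q & t) / len(q | t) if (q | t) else 0.0
            if score > best_score:
                best, best_score = (language, program), score
        return best


memory = ProgramMemory()
memory.add_if_successful(
    "make my usual breakfast: toast and a sliced apple",
    "toast('Bread')\nslice('Apple')",
    success=True,
)
memory.add_if_successful(
    "water the plant", "fill('Cup')\npour('Cup', 'Plant')", success=False
)

# A later, shorter request can now refer to the user's own routine.
recalled = memory.recall("can you make my usual breakfast")
print(recalled)
```

In a full system the recalled pair would be placed in the LLM prompt as an in-context example, so the model can adapt it to the new request rather than replaying it verbatim.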

HELPER-X

In HELPER-X, we extend HELPER to work across the domains of executing plans from dialogue, natural language instruction following, active question asking, and commonsense room reorganization. We propose two versions to extend the memory-augmented prompting of LLMs in HELPER: 1) HELPER-Xp, which retrieves from a memory of domain-tailored prompt templates and associated domain-specific examples, and 2) HELPER-Xs, which expands the memory of HELPER into a shared memory of in-context examples across domains combined with a domain-agnostic prompt template. Additionally, we extend the capabilities of HELPER for question asking by appending to the LLM prompt a question API with functions defining possible questions and their arguments.
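To make the question API idea concrete, the sketch below renders a small set of question functions as text and appends them to a prompt, so the LLM can emit calls to them in its program. The function names (`ask_location`, `ask_appearance`), their wording, and the `# Question API` header are hypothetical illustrations, not the API shipped with HELPER-X.

```python
import inspect


def ask_location(object_name: str) -> str:
    """Ask the user where the named object is."""
    return f"Where is the {object_name}?"


def ask_appearance(object_name: str) -> str:
    """Ask the user what the named object looks like."""
    return f"What does the {object_name} look like?"


# Hypothetical set of questions the agent is allowed to ask.
QUESTION_API = [ask_location, ask_appearance]


def render_api(functions):
    """Render each function's signature and docstring as prompt text."""
    lines = []
    for fn in functions:
        lines.append(f"def {fn.__name__}{inspect.signature(fn)}:")
        lines.append(f'    """{inspect.getdoc(fn)}"""')
    return "\n".join(lines)


def append_question_api(prompt, functions):
    """Append the rendered question API to an existing LLM prompt."""
    return prompt + "\n\n# Question API\n" + render_api(functions)


full_prompt = append_question_api(
    "Input: put the mug away\nProgram:", QUESTION_API
)
print(full_prompt)
```

Exposing questions as typed functions keeps them in the same program format as the agent's other actions, so asking a question becomes one more call the LLM can place in its output.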

HELPER-X achieves few-shot, state-of-the-art performance across the four benchmarks using a single agent, without requiring in-domain training, and remains competitive with agents that have undergone in-domain training.

See our papers for more!

Citation

@inproceedings{sarch2023helper,

title = "Open-Ended Instructable Embodied Agents with Memory-Augmented Large Language Models",

author = "Sarch, Gabriel and

Wu, Yue and

Tarr, Michael and

Fragkiadaki, Katerina",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2023",

year = "2023"}

@inproceedings{sarch2024helperx,

title = "HELPER-X: A Unified Instructable Embodied Agent to Tackle Four Interactive Vision-Language Domains with Memory-Augmented Language Models",

author = "Sarch, Gabriel and Somani, Sahil and Kapoor, Raghav and Tarr, Michael J and Fragkiadaki, Katerina",

booktitle = "ICLR 2024 LLMAgents Workshop",

year = "2024"}
